Though we are fast moving to a cloud-focused world, I still thought we’d look at an old school solution. I was recently doing some work in a Linux environment which had multiple Essbase cubes and I had to do something simple, set up backup exports for these cubes.

To do this, all we need is a shell script and a MaxL script. The shell script might call a simple MaxL script like this:

export database App1.Db1 Level0 data to data_file "Export_Location//App1_Db1.txt"; export database App1.Db2 Level0 data to data_file "Export_Location//App1_Db2.txt"; export database App2.Db1 Level0 data to data_file "Export_Location//App2_Db1.txt";

…and so on, for each of the databases that we need to back up. But if we have a large number of cubes, it becomes a bit clunky and more prone to error. And in my case, we did have a lot of cubes. So how do we make it a bit more seamless for the administrator?

This is where working in a Linux environment is great. The scripting tools that you have in a Unix-flavored environment are more superior than their counterparts in Windows (just my opinion).

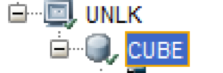

For this example, I’ve created an application and a database.

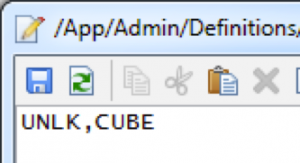

We also need a simple text file…let’s call it our “definition” file. I’ve typed in the name of the application I would like to back up and the name of the database. Remember, Linux is case-sensitive, so make sure you have the correct case.

Note: make sure to hit the enter key after the database name (the script needs the carriage return to understand that the end of the file has been reached).

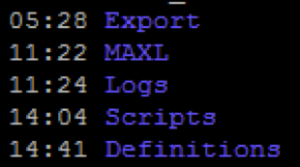

I’ve placed it in a “Definitions” directory on my server. The entire directory structure looks like this:

Yes, I know, I’ve got the wrong case for “MaxL”. Other than that, the purpose of each directory should be self-explanatory.

The MaxL script that we will use, is also pretty simple (never mind the encryption keys). You might want to add labels, disconnects, error trapping etc.

/* Variables: $1=Essbase Server $2=Application Name $3=DB Name $4=Log location $5=Export location */ spool on to "$4//Nightly_Export_$2_$3.log"; set timestamp on; login $key 00000000 $key 4000000 on $1; export database $2.$3 Level0 data to data_file "$5//$2_$3.txt"; spool off; logout; exit 0;

The positional parameters $1-$5 will be passed through from our script. Speaking of which, I also have a shell script in place. The script should read through the definitions in the file and cycle through each database and create exports. The snippet below is what allows us to do this in an elegant manner.

while IFS=',' read -r APP DB; do

sh $MAXLSH/startMaxl.sh -D $MAXLDIR/Nightly_Export_1.maxls $KEY $ESS_SERVER $APP $DB $LOGDIR/Essbase_Daily $HOMEDIR/Export/ESSBASE_LEV0_DATA/${DATESTAMP}_Export 2>&1 >> $ESSBASE_DAILY_LOG

done < $DEFDIR/ESSBASE_BACKUP_2.txt

To simplify the code:

- The “while” loop reads through each line in the definition file.

- It understands that “,” is the delimiter being used and it splits the commas-separated values into 2 variables $APP and $DB.

- The MaxL shell is then invoked. MaxL takes the positional parameters $2 and $3 to be the application name and the database name. It then proceeds to create exports.

- Until it reaches the end of the file (the carriage return from earlier).

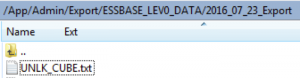

When you run the script, you should see the export in the export directory.

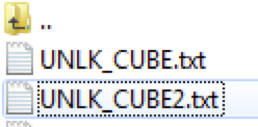

If you need to add other databases to your list, all you need to do is add them to your definitions file. Like so:

No updates are needed to the shell script or the MaxL script. It should generate exports for the second database as well.

The script, in its entirety looks like this:

#!/bin/sh

## Set variables

HOMEDIR=/App/Admin

DEFDIR=$HOMEDIR/Definitions

LOGDIR=$HOMEDIR/Logs

ERRORDIR=$HOMEDIR/Errors

MAXLDIR=$HOMEDIR/MAXL

DATESTAMP=`date +"%Y_%m_%d"`

ESSBASE_DAILY_LOG=$LOGDIR/${DATESTAMP}_Essbase_Daily.log

APPDIR=/App/Oracle/Middleware/user_projects/epmsystem1/EssbaseServer/essbaseserver1/app

MAXLSH=/App/Oracle/Middleware/user_projects/epmsystem1/EssbaseServer/essbaseserver1/bin

KEY=0000000,9999999

ESS_SERVER=UNLK.CUBE.COM

## Create directory with a date stamp

echo "*******************************************************" >> $ESSBASE_DAILY_LOG

echo Essbase Backup Started on `date` >> $ESSBASE_DAILY_LOG

echo "*******************************************************" >> $ESSBASE_DAILY_LOG

echo -e '\n' >> $ESSBASE_DAILY_LOG

mkdir -pv $HOMEDIR/Export/ESSBASE_LEV0_DATA/${DATESTAMP}_Export >> $ESSBASE_DAILY_LOG

echo -e '\n' >> $ESSBASE_DAILY_LOG

echo "Export process begins." >> $ESSBASE_DAILY_LOG

echo -e '\n' >> $ESSBASE_DAILY_LOG

## Loop through and create exports

while IFS=',' read -r APP DB; do

sh $MAXLSH/startMaxl.sh -D $MAXLDIR/Nightly_Export_1.maxls $KEY $ESS_SERVER $APP $DB $LOGDIR/Essbase_Daily $HOMEDIR/Export/ESSBASE_LEV0_DATA/${DATESTAMP}_Export 2>&1 >> $ESSBASE_DAILY_LOG

done < $DEFDIR/ESSBASE_BACKUP_2.txt

echo -e '\n' >> $ESSBASE_DAILY_LOG

echo "Export process ends." >> $ESSBASE_DAILY_LOG

echo -e '\n' >> $ESSBASE_DAILY_LOG

echo "*******************************************************" >> $ESSBASE_DAILY_LOG

echo Essbase Backup Ended `date` >> $ESSBASE_DAILY_LOG

echo "*******************************************************" >> $ESSBASE_DAILY_LOG

echo -e '\n' >> $ESSBASE_DAILY_LOG

exit

So there you have it, a quick and easy way to variable-ize your automation scripts. I’ll add a follow up post over the next couple of weeks, which will take this scenario one step further.

EDIT: Part 2 can be found here.